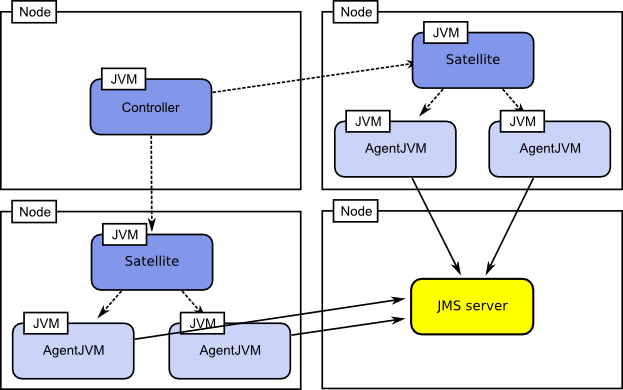

FIGURE 1: SPECjms2007 SUT layout

SPECjms2007 is a messaging benchmark designed to measure the scalability and performance of JMS Servers based on the JMS 1.1 Specification.

This document is a practical guide for setting up and running the SPECjms2007 benchmark. It discusses some, but not all, of the rules and restrictions pertaining to SPECjms2007. Before running the benchmark, we strongly recommend that you read the complete SPECjms2007 Run and Reporting Rules contained in the SPECjms2007 Kit. For an overview of the benchmark architecture, see the SPECjms2007 Design Document also contained in the SPECjms2007 Kit.

The SPECjms2007 Kit refers to the complete kit provided for running the SPECjms2007 benchmark. The SPECjms2007 Kit includes all documentation, source and compiled binaries for the benchmark.

The Provider Module refers to an implementation of the org.spec.perfharness.jms.providers.JMSProvider interface. A default, provider-independent implementation that uses JNDI is included in the SPECjms2007 Kit. A product-specific Provider Module may be required to run the benchmark on some JMS products.

The JMS Server or Server refers to the pieces of hardware and software that provide the JMS facilities to JMS Clients. It may be comprised of multiple hardware and/or software components and is viewed as a single logical entity by JMS Clients. The Server also includes all the stable storage for persistence as required by the JMS Specification.

The JMS Clients or Clients refer to Java application components that use the JMS API. The SPECjms2007 benchmark is a collection of Clients in addition to other components required for benchmark operation.

Destination refers to a JMS destination, which is either a queue or a topic.

Location refers to a single logical entity in the benchmark application scenario. The four entities defined in the SPECjms2007 benchmark are Supermarket (SM), Supplier (SP), Distribution Center (DC), and Headquarters (HQ).

A Topology is the configuration of Locations being used by a particular benchmark run. The SPECjms2007 benchmark has two controlled topologies, the Vertical Topology and the Horizontal Topology, that are used for Result Submissions. The Freeform Topology allows the user complete control over the benchmark configuration.

The BASE parameter is the fundamental measure of performance in the SPECjms2007 benchmark. It represents the throughput performance of a benchmark run in a particular Topology. Each benchmark Topology uses a different metric to report the result, and the value of that metric, which is a measure of the SUT performance in that Topology, is the BASE parameter. In the Vertical Topology the metric is called SPECjms2007@Vertical, and in the Horizontal Topology it is called SPECjms2007@Horizontal. In other words, the BASE parameter is the result achieved for a particular Topology. The BASE parameter cannot be compared across Topologies, i.e. a result achieved in the Horizontal Topology cannot be compared with a result achieved in the Vertical Topology.

An Interaction is a defined flow of messages between one or more Locations. The Interaction is a complete message exchange that accomplishes a business operation. SPECjms2007 defines seven (7) Interactions of varying complexity and length.

A Flow Step is a single step of an Interaction. An Interaction therefore is comprised of multiple Flow Steps.

An Event Handler (EH) is a Java Thread that performs the messaging logic of a single Flow Step. A Flow Step may use multiple Event Handlers to accomplish all of its messaging as required by the benchmark. In relation to the SPECjms2007 benchmark, the Clients are the Event Handlers, which are the components in which all JMS operations are performed.

A Driver (DR) is an Event Handler that does not receive any JMS messages. Drivers are those Event Handlers that initiate Interactions by producing JMS messages. Although they are only the initiators in the JMS message flow and not JMS message receivers, they are included collectively under Event Handlers as they are modelling the handling of other, non-JMS business events (e.g. RFID events) in the benchmark application scenario.

The System Under Test (SUT) is comprised of all hardware and software components that are being tested. The SUT includes all the Server machines and Client machines as well as all hardware and software needed by the Server and Clients.

An Agent is a collection of Event Handlers (EHs, which include DRs) that are associated with a Location. A Location can be represented by multiple Agents.

An AgentJVM is a collection of Agents that run in a single Java Virtual Machine (JVM).

The Controller (also referred to as the ControlDriver) is the benchmark component that drives the SPECjms2007 benchmark. There is exactly one Controller when the benchmark is run. The Controller reads in all of the configuration, instantiates the Topology (the Locations to use and connections between them), monitors progress, coordinates phase changes and collects statistics from all the components.

A Satellite (also referred to as a SatelliteDriver) is the benchmark component that runs AgentJVMs and is controlled by the Controller.

The Framework is the collective term used for the Controller, Satellite and benchmark coordination classes.

A Node is a machine in the SUT. The four kinds of Nodes are server nodes, client nodes, database nodes, and other nodes. A server node is any node that runs the JMS Provider's server software. The client nodes run the benchmark components and must each run exactly one Satellite. If the JMS product is configured with a database that runs on machines separate from those running the JMS server software, these machines are referred to as database nodes. Any other machines needed for the JMS product operation that are not covered by the three kinds described above are included in other nodes.

The Delivery Time refers to the elapsed time measured between sending a specific message and that message being received.

Normal Operation refers to any time the product is running, or could reasonably be expected to run, without failure.

A Typical Failure is defined as a failure of an individual element (software or hardware) in the SUT. Some examples to qualify this include Operating System failure, interruption to electricity or networking or death of a single machine component (network, power, RAM, CPU, disk controller, or an individual disk, etc). It includes failure of the Server as well as Clients.

A Single-Point-of-Failure is defined as a single Typical Failure. It is not extended to cover simultaneous failures or larger scale destruction of resources.

Non-Volatile Storage refers to the mechanism by which persistent data is stored. Non-Volatile Storage must be online and immediately available for random access read/write. Archive storage (e.g. tape archives or other backups) does not qualify as it is not considered as online and immediately available.

The Measurement Period refers to the length of time during which measurement of the performance of the SUT is made.

The Warmup Period refers to the period from the commencement of the benchmark run up to the start of the Measurement Period.

The Drain Period refers to the period from the end of the Measurement Period up to the end of the benchmark run.
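The three periods defined above partition a benchmark run in time. The following is a minimal sketch of that partition, not the Framework's own code: the class and method names are hypothetical, and treating each boundary as belonging to the later period is an assumption. The durations used in `main` are the defaults listed for run.properties later in this guide.

```java
/** Sketch: map elapsed run time onto the Warmup, Measurement and Drain Periods. */
final class RunPhaseSketch {

    enum Phase { WARMUP, MEASUREMENT, DRAIN, FINISHED }

    /**
     * Returns the period that contains the given instant.
     * All arguments are in seconds; elapsed is measured from the start of the run.
     * Assumption: a boundary instant belongs to the period that starts there.
     */
    static Phase phaseAt(long elapsed, long warmup, long measurement, long drain) {
        if (elapsed < 0) {
            throw new IllegalArgumentException("elapsed must be >= 0");
        }
        if (elapsed < warmup) {
            return Phase.WARMUP;
        }
        if (elapsed < warmup + measurement) {
            return Phase.MEASUREMENT;
        }
        if (elapsed < warmup + measurement + drain) {
            return Phase.DRAIN;
        }
        return Phase.FINISHED;
    }

    public static void main(String[] args) {
        // Default durations from run.properties: 600 s, 1800 s and 60 s.
        long warmup = 600, measurement = 1800, drain = 60;
        for (long t : new long[] {0, 599, 600, 2399, 2400, 2460}) {
            System.out.println(t + " s -> " + phaseAt(t, warmup, measurement, drain));
        }
    }
}
```

With the default durations, a complete run under this model lasts 2460 seconds, of which only the middle 1800 seconds are measured.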

The Final Version refers to the final version of a product that is made available to customers.

The Proposed Final Version refers to a version of a product that, while not a final version as defined above, is final enough to use to submit a benchmark result. Proposed final versions can include Beta versions of the product.

The General Availability (GA) date for a product is the date on which customers can place an order for, or otherwise acquire, the product.

The Run Result refers to the HTML file that is generated at the end of a benchmark run that indicates the pass/fail status of all the Interactions in the benchmark.

A Result Submission refers to a single JAR file containing a set of files describing a SPEC benchmark result that is submitted to SPEC for review and publication. This includes a Submission File, a Configuration Diagram and a Full Disclosure Archive.

The Submission File refers to an XML-based document providing detailed information on the SUT components and benchmark configuration, as well as some selected information from the benchmark report files generated when running SPECjms2007. The Submission File is created using the SPECjms2007 Reporter.

The Configuration Diagram refers to a diagram in common graphics format that depicts the topology and configuration of the SUT for a benchmark result.

The Full Disclosure Archive (FDA) refers to a soft-copy archive of all relevant information and configuration files needed for reproducing the benchmark result.

The Full Disclosure Report (FDR) refers to the complete report that is generated from the Submission File. The FDR is the format in which an official benchmark result is reported on the SPEC Web site.

The SPECjms2007 Reporter refers to a standalone utility provided as part of the SPECjms2007 Kit that is used to prepare a Result Submission. The SPECjms2007 Reporter generates the Submission File and gathers all information pertaining to the Result Submission into a directory to be packaged and submitted to SPEC for review and publication. The SPECjms2007 Reporter also generates a preview of the Full Disclosure Report to be verified by the submitter before submitting the result.

SPECjms2007 benchmark versions follow the numbering system A.B where

The major goal of the SPECjms2007 benchmark is to provide a standard workload evaluating the performance and scalability of JMS-based Message-Oriented Middleware platforms. Performance is recorded and compared using two standard metrics: SPECjms2007@Horizontal and SPECjms2007@Vertical. In addition, the benchmark provides a flexible framework for JMS performance investigation and analysis.

The SPECjms2007 Design Document includes a complete description of the application scenario and Java workload construction.

In short, the business-level application scenario (see SPECjms2007 Design Document) is implemented as a set of controlled JVMs. These are arranged into the Framework components, Controller and Satellites, (see Figure 1) which control the general distribution of the Agents (see Figure 2) which in turn control the actual JMS operations running in their Event Handler threads. A good comprehension of these can be gained simply by using Figures 1 and 2 to visualise the description of terms (Section 1.1). Note that (except for the Destination setup application) JMS operations only occur between Event Handlers and the JMS Server.

This section of the User's Guide describes the software and hardware environment required to run the workload. A more formal list of requirements is given in the Run Rules document.

Although SPECjms2007 can be run on a single machine for testing purposes, compliance with the SPECjms2007 Run and Reporting Rules requires that the JMS Clients be run on a machine other than the JMS Server. Therefore, a compliant hardware configuration must include a network and a minimum of two systems.

SPECjms2007 also has the concept of a Controller which orchestrates the running benchmark. This can be hosted on any machine, whether part of the SUT or not. All Clients must be capable of connecting via RMI to the Controller. It is also recommended that the Controller is on the same LAN as the Clients. A typical configuration is illustrated in Figure 1.

SPECjms2007 uses Java 5 constructs and class libraries. Note that manual compilation must use -source 1.5.

Fundamentally, only Java and a JMS Server product are required. Users will need the set of JMS Client jars packaged with the JMS Server product. SPECjms2007 uses the JMS 1.1 API Specification. As is standard with JMS, it is assumed that products can be accessed and administered through JNDI. Facilities are present for vendors to use other mechanisms if they desire but this is an advanced topic.

Invocation is handled through Ant. A trimmed-down version is provided in the /bin directory or it can be downloaded from http://ant.apache.org.

It is a requirement of the benchmark auditing that clocks are within 100ms of each other. It is expected that all machines involved in the test will synchronize clocks through the use of Network Time Protocol. In order to increase accuracy, it is recommended that a local machine is used as the NTP time server.
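As an illustration of the 100 ms requirement, the sketch below compares two clock readings against a tolerance. It is only a sketch under stated assumptions: the class and method names are hypothetical, the way a remote clock reading is obtained (and any correction for network latency) is deliberately left out, and a difference of exactly 100 ms is treated as acceptable.

```java
/** Sketch: check that two clock readings are within a given tolerance of each other. */
final class ClockCheckSketch {

    /** The tolerance stated in the text above, in milliseconds. */
    static final long MAX_DIFFERENCE_MS = 100;

    /**
     * Returns true when the two readings differ by no more than the tolerance.
     * Both readings are epoch milliseconds taken at (ideally) the same instant.
     */
    static boolean withinTolerance(long controllerMillis, long satelliteMillis, long toleranceMillis) {
        return Math.abs(controllerMillis - satelliteMillis) <= toleranceMillis;
    }

    public static void main(String[] args) {
        long controller = System.currentTimeMillis();
        // Hypothetical remote readings: one 40 ms ahead, one 250 ms behind.
        long satelliteA = controller + 40;
        long satelliteB = controller - 250;
        System.out.println("Satellite A ok: " + withinTolerance(controller, satelliteA, MAX_DIFFERENCE_MS));
        System.out.println("Satellite B ok: " + withinTolerance(controller, satelliteB, MAX_DIFFERENCE_MS));
    }
}
```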

This section pulls together instructions from the rest of the User's Guide to help first-time users get SPECjms2007 running quickly with a minimal Horizontal Topology. Explanation is kept to a minimum, so if you encounter problems you will need to consult the more detailed sections. It is expected that users have read the introduction before attempting to follow these instructions.

SPECjms2007 is an enterprise benchmark and is not intended to run on a single machine but the first user experience is likely to be on a single user workstation. The following instructions should be all that is needed provided you have the required software environment set up.

1. Extract the SPECjms2007 Kit to a directory such as c:\specjms2007 or ~/specjms2007.
2. Set org.spec.jms.files.vendor (in file config/run.properties) to point to the relevant <vendor>.properties file in the config directory.
3. Ensure that config/default.env includes your JMS Client jars in the CLASSPATH entry and correctly configures the Java executable settings for your AgentJVMs. Also make sure that the JMS Client jars are on the system CLASSPATH and that java is accessible on the PATH.
4. Whether you use <vendor>.properties or the default sample-vendor.properties, you will need to edit the file to point to the JMS Server resources. This probably entails entering the correct JNDI InitialConnectionFactory parameters, which you will need to get from your product documentation.
5. Run ant startController in one window and ant startSatellite in another window (you may choose to run the single ant startBoth, but this gives confusing output).
6. The results of the first run are stored in output/00001. Try looking at specjms-result.html for audit tests and in interaction.measurement.xml for some greater detail.

SPECjms2007, as with most benchmarks, needs to concern itself with the control, uniformity and reproducibility of the benchmark environment. The basic system for achieving this includes the following instructions.

1. Copy the JMS Client jars into the lib directory of each Node. This ensures you know exactly what libraries are being used.
2. Ensure that config/default.env includes your JMS Client jars in the CLASSPATH entry and correctly configures the Java executable settings for your AgentJVMs. Also make sure that the JMS Client jars are on the system CLASSPATH and that java is accessible on the PATH.
3. Run ant startController on the Controller machine and ant -Dcontroller.host=<name> startSatellite on the relevant Satellite Nodes.

The following instructions are for users of the general release version of SPECjms2007. There is also a development release which has certain extra requirements.

SPECjms2007 comes in two parts. All users require the main project, which provides a "generic JNDI" implementation. For several products there may be an additional Provider Module to install. This module will most likely exist to fix a minor incompatibility or simplify the process of setting up the required JMS Destinations. The SPECjms2007 Kit must be installed on all Nodes in the SUT including the machine where the Controller is run.

The SPECjms2007 Kit is supplied in a compressed archive that should be extracted to a location of the user's choosing. Users wishing to make use of the Eclipse programming IDE should keep the main directory named specjms2007. The package contains the relevant files to import directly into an Eclipse workspace provided this name is retained.

It is expected that all users will be using the Ant tool, a trimmed-down version of which can be found in the /bin directory.

There are two choices when installing a Provider Module. The simplest is to unpack it directly over the installation directory of the main SPECjms2007 Kit. Alternatively, if you have the developer installation of the SPECjms2007 Kit, you must run the following two commands, in order, from the relevant location under the vendor_specific subdirectory: ant -f build-vendor.xml jar-vendor, then ant -f build-vendor.xml install-vendor. You will need to add lib/specjms2007.jar to the CLASSPATH before running these commands. In either case, you will probably need to copy any JMS Client jars into the /lib directory.

There is no "uninstall", so be careful what you do with the combined fileset afterwards. Also be aware that the installation will overwrite files such as default.env, so take a copy of the config directory if you have made changes you want to merge.

The benchmark follows a simple structure, given below. Each Provider Module also follows this structure, enabling it to be copied directly over the base installation.

| Directory | Description |
|---|---|
| /bin | Helper scripts and platform executables (of which there may be none by default). |
| /classes | Compiled Java classes are stored here temporarily whilst building specjms2007.jar. This directory is not part of the CLASSPATH. |
| /config | The hierarchy of user, Topology and product-specific configuration files. |
| /doc | All benchmark documentation and Javadocs. |
| /lib | Required libraries (jar files) live here. Precise requirements differ between the Controller and Satellites. |
| /output | Test results are stored in sequentially-numbered directories here. The number is stored in ~/.specjms/specjms.seq. |
| /redistributable_sources | Contains copies of the licenses that cover the freely-available and redistributable packages used in the SPECjms2007 Kit. |
| /src | Benchmark source code. |
| /submission | User-declared information detailing the SUT and benchmark configuration for a submittable run. |
| /vendor_specific | If present (only in internal developer release), this contains all product-specific Provider Modules. |

For full details on the package structure, please consult the Javadocs in the /doc directory. The following is a diagram demonstrating the interaction of the major packages which comprise this benchmark.

SPECjms2007 ships with a precompiled jar file but, providing the installation has been done correctly, compiling the project is a simple matter of running ant jar from the SPECjms2007 installation directory.

To build and install a Provider Module, either unpack it directly over the main project, or add lib/specjms2007.jar to the CLASSPATH and run ant -f build-vendor.xml jar-vendor followed by ant -f build-vendor.xml install-vendor from the relevant location under the vendor_specific subdirectory.

Note that if building manually or from Eclipse, you must update the jar file or invoked AgentJVMs will be using old code! For this reason you should not add the classes directory to the CLASSPATH.

Comment: It is not allowed to submit results using any user-compiled version of the benchmark other than the version compiled and released by SPEC. The only component of the benchmark that may be compiled is the product-specific Provider Module. See Section 3.7 in the SPECjms2007 Run Rules for more information.

SPECjms2007 default locations will be saved to <user_home>/.specjms/framework.properties the first time you invoke Ant. You will not ordinarily need to edit these.

All configuration files reside in the /config directory. Apart from default.env, files only need editing on the Controller as the effective values are transferred over RMI to each Satellite.

| File | Description |
|---|---|
| run.properties | Defines global properties and points to further properties files for the Topology and product configurations. These files are combined together in the Controller. If a Provider Module was installed, this file will need to be updated to point to the respective <vendor>.properties file rather than the default sample-vendor.properties. |
| <vendor>.properties | JNDI connection details for the product under test. Users need to create a new file for their own physical environment. |
| freeform.properties | Contains a complete set of properties that define the benchmark Topology and control how it is run. |
| horizontal.properties | Contains a complete set of properties that define a submittable Horizontal Topology. |
| vertical.properties | Contains a complete set of properties that define a submittable Vertical Topology. |
| default.env | Environment properties that will be used in the invocation of AgentJVMs. It is here that the JRE to be used for running the workload can be specified. If a Provider Module was installed, it may have overwritten default.env to point at the correct Client jars, assuming they are in the /lib directory. Although this is not a compulsory way of including them, SPECjms2007 will automatically use them if they are copied into this location. If not, then the CLASSPATH should be updated to point to their location. The system CLASSPATH can be included as "${CLASSPATH}". Note: This file is not automatically copied from the Controller. Each Satellite Node must have an identically-named file (although the contents may be tailored to each machine). |

At its most basic level, all the user needs to configure is whether to run a Horizontal or Vertical test, which is set in run.properties (org.spec.jms.files.topology = horizontal.properties), and the scaling value in the relevant Topology-specific configuration file, e.g. in horizontal.properties (org.spec.jms.horizontal.BASE = 10).
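To make the two settings concrete, the sketch below parses them with java.util.Properties and merges the two sets into one flat view. This is an illustration only: the class and method names are hypothetical, the file contents are supplied as in-memory strings rather than read from the config directory, and the rule that later arguments override earlier ones is an assumption of this sketch, not a statement of the order the Framework actually applies.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

/** Sketch: read the two basic settings and merge them into one flat property set. */
final class ConfigMergeSketch {

    /** Parses text in the standard .properties syntax. */
    static Properties parse(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            // Not expected when reading from an in-memory string.
            throw new IllegalStateException(e);
        }
        return p;
    }

    /** Copies every set into one flat view; later arguments win on duplicate keys (assumed order). */
    static Properties flatten(Properties... lowestToHighest) {
        Properties flat = new Properties();
        for (Properties p : lowestToHighest) {
            flat.putAll(p);
        }
        return flat;
    }

    public static void main(String[] args) {
        Properties run = parse("org.spec.jms.files.topology = horizontal.properties\n");
        Properties topology = parse("org.spec.jms.horizontal.BASE = 10\n");
        Properties flat = flatten(run, topology);
        System.out.println("Topology file: " + flat.getProperty("org.spec.jms.files.topology"));
        System.out.println("BASE: " + Integer.parseInt(flat.getProperty("org.spec.jms.horizontal.BASE")));
    }
}
```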

Properties can actually be specified in any of the files (or, more usefully, overridden) as they are flattened in the Framework. The priority of ordering is :

Each of the above files should contain plenty of documentation on what its properties are for but the following is a complete listing of configuration parameters in the order in which they appear in their respective files.

| Property | Default | Description |
|---|---|---|
| org.spec.specj.security.policy | security/driver.policy | This location is specified relative to the config directory. Users should never need to change this but the specified file must be identical on all Nodes. |
| org.spec.rmi.timeout | 60 | RMI timeout in seconds for all connection attempts in the Framework. |
| org.spec.rmi.poll | 2000 | RMI connection attempts in the Framework are made at this interval (in milliseconds). |
| org.spec.specj.verbosity | INFO | Maximum verbosity level for logging. This must be parsable by java.util.logging.Level.parse(). Common values are INFO, FINE and ALL. |
| org.spec.specj.logToFile | true | If true, output from the Controller and Satellites will be duplicated to a file in the output directory. Note that remote Satellite files are not automatically collected by the Controller. |
| org.spec.specj.logAgentsToFile | false | Used by the Satellites to direct Agent output to individual files as well as their own output. Note that these files are not automatically collected by the Controller. |
| org.spec.specj.copyConfigToOutput | true | Copies the active config directory into the results directory for this run. This will include any config files not being used in this run. |
| org.spec.jms.isolateAgents 🔒 | true | Isolate multiple Agents within a JVM using a non-cascading ClassLoader. This avoids JVM-level optimisations spanning the logical boundary between Agents. |
| org.spec.jms.stats.histogram.upperbound 🔒 | 10000 | Delivery times for individual messages are recorded into a histogram per Destination. This is the maximum time (milliseconds) which that histogram supports. |
| org.spec.jms.stats.histogram.granularity 🔒 | 100 | Delivery times for individual messages are recorded into a histogram per Destination. This is the granularity (milliseconds) which that histogram supports. |
| org.spec.jms.stats.sampleTime 🔒 | 60 | Throughput measurements for individual Destinations are collected into samples of this length (seconds). It is not recommended that this is less than 10. Note: This measurement facility is not used by the formal measurement process. |
| org.spec.jms.stats.trimInitialSamples 🔒 | 1 | When summarising throughput histories, this many samples are thrown away from the beginning of a sequence. It is not recommended that this is less than 1. |
| org.spec.jms.stats.trimFinalSamples 🔒 | 1 | When summarising throughput histories, this many samples are thrown away from the end of a sequence. It is not recommended that this is less than 1. |
| org.spec.time.heartbeatInterval 🔒 | 30000 | Length of time (milliseconds) between checks on Agent status. Set to zero to disable. It is not recommended to go below 1000. |
| org.spec.specj.trimExceptions | true | The Controller will attempt to simplify RemoteExceptions happening on Agents. If you suspect RMI itself is part of the problem you most certainly need to set this to false. |
| org.spec.specj.clockDifference 🔒 | 100 | If any Satellite clock differs from the Controller clock by more than this threshold, a warning is raised. |
| org.spec.specj.threadpool.limit | 20 | The number of parallel threads the Controller's ExecutorService uses to communicate with Agents when collecting results or signalling state changes. |
| org.spec.jms.report.* | | These control the results files which are generated. See Section 5 for more details. |
| org.spec.jms.time.realtimeReportingPeriod 🔒 | 300 | The period (seconds) at which statistics are reported by the Controller during a run. A value of zero will disable this feature. |
| org.spec.jms.time.warmup | 600 | The length (seconds) of the Warmup Period. Please consult the Run Rules for further details on this. |
| org.spec.jms.time.measurement | 1800 | The length (seconds) of the Measurement Period. Please consult the Run Rules for further details on this. |
| org.spec.jms.time.drain | 60 | The length (seconds) of the Drain Period. |
| org.spec.jms.files.topology | horizontal.properties | The file (relative to the config directory) in which the desired Topology is configured. |
| org.spec.jms.files.vendor | sample-vendor.properties | The file (relative to the config directory) in which the product specifics are configured. |
| org.spec.specj.environment | default.env | The file (relative to the config directory) in which the system environment for JVMs on this Node is configured. |
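The two histogram properties above determine how delivery times are bucketed. The following is a rough sketch of such a histogram, not the benchmark's implementation: the class name is hypothetical, and both the extra overflow bucket for values at or above the upper bound and the clamping of negative delivery times to zero are assumptions made for illustration.

```java
/** Sketch: fixed-width histogram of message delivery times, in milliseconds. */
final class DeliveryHistogramSketch {

    private final long upperBoundMs;
    private final long granularityMs;
    private final long[] buckets;

    DeliveryHistogramSketch(long upperBoundMs, long granularityMs) {
        if (granularityMs <= 0 || upperBoundMs < granularityMs) {
            throw new IllegalArgumentException("need 0 < granularity <= upper bound");
        }
        this.upperBoundMs = upperBoundMs;
        this.granularityMs = granularityMs;
        // One bucket per granularity step, plus a final overflow bucket (an assumption).
        int regular = (int) ((upperBoundMs + granularityMs - 1) / granularityMs);
        this.buckets = new long[regular + 1];
    }

    /** Records one message, given its send and receive timestamps in epoch milliseconds. */
    void record(long sentMillis, long receivedMillis) {
        // Clock skew between machines could make this negative; clamp to zero (an assumption).
        long delivery = Math.max(0, receivedMillis - sentMillis);
        int index = delivery >= upperBoundMs
                ? buckets.length - 1
                : (int) (delivery / granularityMs);
        buckets[index]++;
    }

    long countInBucket(int index) {
        return buckets[index];
    }

    int bucketCount() {
        return buckets.length;
    }

    public static void main(String[] args) {
        // Defaults from the table: upper bound 10000 ms, granularity 100 ms.
        DeliveryHistogramSketch h = new DeliveryHistogramSketch(10_000, 100);
        h.record(1_000, 1_250);   // 250 ms delivery time
        h.record(1_000, 20_000);  // beyond the upper bound
        System.out.println("buckets: " + h.bucketCount());
        System.out.println("200-299 ms: " + h.countInBucket(2));
        System.out.println("overflow: " + h.countInBucket(h.bucketCount() - 1));
    }
}
```

With the default values this gives 100 regular buckets of 100 ms each, which shows why a coarser granularity or lower upper bound reduces the memory held per Destination.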

The following properties are relevant for all configurations. In other topologies many of the freeform values will be locked into fixed values or ratios. The user remains free to experiment with overriding these at the expense of a non-submittable benchmark run. Such properties are denoted with a padlock icon.

| Property | Default | Description |
|---|---|---|
| org.spec.jms.configuration 🔒 | | The type of Topology being presented by this configuration. Options are horizontal, vertical or freeform. |
| org.spec.jms.*.nodes | | Agents will be assigned to their respective list of Nodes in a round-robin allocation. More detail on this is given in Section 5.3. |
| org.spec.jms.*.count 🔒 | 1 | The number of Locations in this scenario. Changing this in any Topology other than Freeform will invalidate the test results. |
| org.spec.jms.*.agents_per_location | 1 | The number of cloned Agents which represent a single Location. More detail on this is given in Section 5.3. |
| org.spec.jms.debug.*.exclude_agents 🔒 | | A list of Agents (e.g. "4-1") that will NOT run during the benchmark. |
| org.spec.jms.*.jvms_per_node | 1 | Limits the number of Client JVMs running on a single Node. Agents will be round-robin distributed amongst these JVMs. More detail on this is given in Section 5.3. |
| org.spec.jms.*.jvmOptions | | This allows Java tuning to be applied per AgentJVM. The principal use is expected to be tailored heap sizes. |
| org.spec.jms.*.max_connections_per_agent | 1 | The number of javax.jms.Connection objects shared between Event Handlers in a single Agent. |
| org.spec.jms.dc.sessions_share_connection | true | Defines if DC Event Handlers with two javax.jms.Session objects will use the same connection. If false, the sessions are allocated connections independently (but may still get the same one from the shared pool). |
| org.spec.jms.*EH.count | 1 | The number of Event Handlers of the given type present in each Agent. |
| org.spec.jms.*DR.count | 1 | The number of Drivers of the given type present in each Agent (as a reminder, a Driver is a type of Event Handler thread that initiates an Interaction). The prescribed Interaction rate will be distributed across the Drivers. |
| org.spec.jms.*DR.rate 🔒 | 1 | The rate at which a specific Interaction is run. This rate is per Location and is spread across all relevant Event Handlers. |
| org.spec.jms.*DR.msg.count 🔒 | 0 | The fixed number of messages a Driver will insert into the system. This is mostly used for debugging. A value of 0 disables this feature. |
| org.spec.jms.*DR.cache.size 🔒 | 100 | The number of cache entries available for message creation. |
| org.spec.jms.*.msg.prob.* 🔒 | | Message sizes for each Interaction are randomly selected from three possibilities. These values define the probability of selecting a given size. |
| org.spec.jms.*.msg.size.* 🔒 | | Message sizes for each Interaction are randomly selected from three possibilities. These values define the size of each selection. Note that sizes are not given directly (e.g. KB) but are specific to the type of message. |
| org.spec.jms.sm.cashdesks_per_sm 🔒 | 5 | Number of cash desks per SM. Used for the creation of the statInfoSM message. |
| org.spec.jms.*.cf | | JNDI connection factory names for specific Event Handlers. If specified, these will override the default given in the <vendor>.properties file. More detail on this is given in Section 5.3. |
| org.spec.jms.ProductFamily_count 🔒 | 4 | Specifies how many topics exist for the product families. This has particular effect on Interaction 2. |
| org.spec.jms.ProductFamilies_per_SP_percent 🔒 | 100 | Defines the percentage of product families a Supplier Location offers (and therefore the number of topics it subscribes to). This parameter is also used for vertical scaling. This parameter has a strong relation to the parameter ProductFamilies_per_SP, which is used for horizontal scaling (see below). |
| org.spec.jms.Interaction7.autoAck 🔒 | true | Uses AUTO_ACKNOWLEDGE for subscribers in Interaction 7. The alternative is DUPS_OK. CLIENT_ACKNOWLEDGE is not supported. |
| org.spec.jms.crcFrequency 🔒 | 1000 | How often (in messages) each JMS Producer will invoke a CRC (Adler32) of the transmitted bytes. An attempt is made to spread the trigger time of individual Event Handlers evenly across the system. |
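Several properties above mention round-robin allocation, for example of Agents to the Nodes listed in org.spec.jms.*.nodes. The sketch below shows what a plain round-robin assignment looks like. It is an assumption-laden illustration: the class name, the Agent identifiers and the Node names are hypothetical, and the benchmark's actual allocation is described in Section 5.3 rather than here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Sketch: assign Agents to Nodes in round-robin order. */
final class RoundRobinSketch {

    /** Returns, for each Node in list order, the Agents assigned to it. */
    static Map<String, List<String>> assign(List<String> agents, List<String> nodes) {
        if (nodes.isEmpty()) {
            throw new IllegalArgumentException("at least one node is required");
        }
        Map<String, List<String>> byNode = new LinkedHashMap<>();
        for (String node : nodes) {
            byNode.put(node, new ArrayList<>());
        }
        for (int i = 0; i < agents.size(); i++) {
            // Agent i goes to node (i mod number of nodes).
            String node = nodes.get(i % nodes.size());
            byNode.get(node).add(agents.get(i));
        }
        return byNode;
    }

    public static void main(String[] args) {
        // Hypothetical Agent identifiers and Node names.
        List<String> agents = Arrays.asList("1-1", "2-1", "3-1", "4-1", "5-1");
        List<String> nodes = Arrays.asList("nodeA", "nodeB");
        System.out.println(assign(agents, nodes));
    }
}
```

The same modulo scheme could be applied a second time within a Node to spread its Agents across the JVMs allowed by jvms_per_node.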

The following properties are specific to Horizontal topologies. Freeform properties denoted above as fixed will have values specific to Horizontal Topology. Changing any of them results in a non-submittable benchmark run.

| Property | Default |

|---|---|

| org.spec.jms.horizontal.BASE | 5 |

| This is the BASE scaling factor for horizontal tests. Although the value can be lower, the transaction mix will be incorrect for values under 5. It is currently synonymous with org.spec.jms.sm.count. | |

| SM.count_X | 1 |

| The number of Supermarket Locations relative to the horizontal scale. | |

| SP.count_X | 0.4 |

| The number of Supplier Locations relative to the horizontal scale. | |

| HQ.count_X | 0.1 |

| The number of Headquarters instances relative to the horizontal scale. | |

| SMs_per_DC | 5 |

| The number of Supermarkets served by a single Distribution Center. The number of DCs is calculated from this value. | |

| ProductFamily_count_X | 1 |

| The number of unique product families in the system relative to the horizontal scale. | |

| ProductFamilies_per_SP | 5 |

| This property defines to how many product families a Supplier subscribes (as a fixed value). | |
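The way these multipliers combine with the BASE can be sketched as follows. This is an illustration only: the function name `horizontal_counts` is hypothetical, and rounding fractional results up is an assumption, since the rounding used by the benchmark is not stated here.

```python
import math
from fractions import Fraction

# Defaults copied from the Horizontal Topology table above; kept as strings
# so that Fraction can represent 0.4 and 0.1 exactly.
HORIZONTAL_DEFAULTS = {
    "SM.count_X": "1",
    "SP.count_X": "0.4",
    "HQ.count_X": "0.1",
    "SMs_per_DC": "5",
    "ProductFamily_count_X": "1",
}

def horizontal_counts(base, props=HORIZONTAL_DEFAULTS):
    """Estimate Location counts for a given horizontal BASE.

    Assumption: fractional results are rounded up.
    """
    def scaled(name):
        return math.ceil(Fraction(props[name]) * base)

    sm = scaled("SM.count_X")
    return {
        "SM": sm,
        "SP": scaled("SP.count_X"),
        "HQ": scaled("HQ.count_X"),
        # The number of DCs is derived from the number of SMs.
        "DC": math.ceil(Fraction(sm) / Fraction(props["SMs_per_DC"])),
        "ProductFamilies": scaled("ProductFamily_count_X"),
    }

print(horizontal_counts(10))
```

Under these assumptions, a BASE of 10 gives ten Supermarkets, four Suppliers, one Headquarters and two Distribution Centers.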

The following properties are specific to Vertical topologies. Freeform properties denoted above as fixed will have values specific to Vertical Topology. Changing any of them results in a non-submittable benchmark run.

| Property | Default |

|---|---|

| org.spec.jms.vertical.BASE | 1 |

| This is the BASE scaling factor for vertical tests. | |

| org.spec.jms.sm.count | 10 |

| The number of Supermarkets. | |

| org.spec.jms.dc.count | 2 |

| The number of Distribution Centers. | |

| org.spec.jms.sp.count | 5 |

| The number of Suppliers. | |

| org.spec.jms.hq.count | 2 |

| The number of instances of the Headquarters. | |

| org.spec.jms.*.rate_X | |

| The Interaction rate relative to the vertical scale. | |

| org.spec.jms.crcFrequency_X | 200 |

| How often (in messages) each JMS Producer will invoke a CRC (Adler32) of the transmitted bytes relative to the vertical scale. | |

The <vendor>.properties file contains the settings to connect SPECjms2007 to a specific product and JMS Server. If other JNDI properties need to be set, this can be accomplished in the standard manner of including a jndi.properties file on the CLASSPATH.

| Property | Default |

|---|---|

| providerClass | JNDI |

| A Provider Module may supply an alternative class here. Otherwise, leave this at the default. | |

| initialContextFactory | |

| The name of the product-specific class for implementing JNDI lookups. | |

| providerUrl | |

| The URL of the JNDI provider. The format of this is specific to the initialContextFactory selected above. | |

| connectionFactory | SPECjmsCF |

| The default connection factory to which all Event Handlers connect. | |

| jndiSecurityPrincipal | |

| A shortcut to setting the JNDI username if required. | |

| jndiSecurityCredentials | |

| A shortcut to setting the JNDI password if required. | |

The default.env file contains the environment variables passed to AgentJVMs. Users can add anything they require here, although the system environment (e.g. that of the Satellite) is automatically included.

| Property | Default |

|---|---|

| JAVA_HOME | |

| The installation directory of the JRE to be used by AgentJVMs. | |

| JVM_OPTIONS | |

| Options specific to JVMs on the current Node. | |

| JAVA | ${JAVA_HOME}/bin/java ${JVM_OPTIONS} |

| If set, this overrides explicit usage of the above two variables. It allows a mechanism to execute a script for example, rather than directly invoking Java. If unset, the benchmark will effectively combine the previous two variables together in the same manner as the default for JAVA. | |

| CLASSPATH | |

| If set, this overrides the system environment (although it can be included with ${CLASSPATH}). | |

There are some other low-level properties which may be of use when debugging. These can be set in any of the configuration files but are probably best placed in the <vendor>.properties file.

| Property | Default |

|---|---|

| wt | 120 |

| SPECWorkerThread start timeout (s). This controls the time to wait for an Event Handler to start (including connecting to all JMS resources). | |

| ss | 30 |

| AgentJVM statistics reporting period (s). Setting this to 0 will disable periodic reporting entirely. Note that this data (seen in the Satellite's output) is purely for visual feedback and is never used by the benchmark. It represents a smoothed version of the cumulative rate being achieved by all Event Handlers in an Agent. | |

| us | |

| JMS username given when creating Connections. | |

| pw | |

| JMS password given when creating Connections. | |

The default package for SPECjms2007 is designed such that it can run on any product provided the relevant Destinations are created for Agents to use. Also available for download are product-specific packages where a vendor has chosen to provide one. Reasons for them to do so may include:

To run SPECjms2007 it must be configured for the current environment and JMS Server. Configuring the benchmark properly requires understanding how the Agents and Framework operate, which is described below.

The SPECjms2007 scenario includes many Locations represented by many Event Handlers. In order to drive the JMS Server to its capacity, Event Handlers may well be distributed across many Nodes. The reusable Framework designed to control SPECjms2007 aims to co-ordinate these distributed activities without any inherent scalability limitations.

Section 1.1 briefly introduces the terminology used to identify many of the components of a benchmark run. The stages of a benchmark run are as follows (corresponding text can be observed in output from the Controller). All communication within the Framework is done via RMI.

The Controller component reads in all of the configuration and topological layout preferences given by the user. This will include items such as the number of different types of Location and lists of the Nodes across which they may be run. In the two submittable topologies, many of these values are either fixed or are automatically calculated based upon the scaling factor.

With this knowledge, the Controller instantiates the software components of the SUT. It begins this by starting an RMI server and connecting to a Satellite process on each Node machine identified as part of this test to give it specific instructions. In all places (throughout this benchmark) where lists are given, work is distributed equally using a simple round-robin algorithm.
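A minimal sketch of such a round-robin distribution is given below. The function name `assign_round_robin` and the Agent and Node names are hypothetical; the benchmark's own implementation may differ in detail.

```python
from itertools import cycle

def assign_round_robin(agents, nodes):
    """Distribute agents over nodes in list order, wrapping around."""
    assignment = {node: [] for node in nodes}
    for agent, node in zip(agents, cycle(nodes)):
        assignment[node].append(agent)
    return assignment

# Five hypothetical Supermarket Agents spread over two hypothetical Nodes.
layout = assign_round_robin(["SM1", "SM2", "SM3", "SM4", "SM5"],
                            ["nodeA", "nodeB"])
print(layout)
```

With an uneven number of Agents, the Nodes at the start of the list receive one more Agent than those at the end.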

The Satellite is a simple part of the Framework that knows how to build the correct environment to start the required JVM processes. It takes the Controller's configuration and starts the Agents relevant to that Node. Although each Agent is logically discrete from its peers, the Satellite will, based upon the user configuration, combine many Agents into a single AgentJVM for reasons of scalability. There is an architectural minimum of one AgentJVM per class of Location (SP, SM, DC, HQ), meaning a minimum of four AgentJVMs in the SUT. Each Agent connects back to the Controller to signal its readiness.

The Controller signals all Agents to initialise their Event Handler threads and connect to the JMS resources they will be using (this includes both incoming and outgoing Destinations). Each Event Handler is implemented as a Java thread.

Load-generating threads (Drivers) ramp up their throughput from zero to their configured rate over the Warmup Period. This helps ensure the SUT is not swamped by an initial rush when many of its constituent elements may not yet be fully prepared.
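The ramp can be pictured with the sketch below. The linear shape, the function name `ramp_rate` and the example figures are assumptions made for illustration; the text above only states that throughput rises from zero to the configured rate over the Warmup Period.

```python
def ramp_rate(elapsed_s, warmup_s, target_rate):
    """Return the Driver rate at a given time, assuming a linear ramp."""
    if warmup_s <= 0 or elapsed_s >= warmup_s:
        return float(target_rate)
    return target_rate * max(elapsed_s, 0) / warmup_s

# Hypothetical 600 second Warmup Period and a target of 50 messages/s.
for t in (0, 150, 300, 600, 900):
    print(t, ramp_rate(t, 600, 50))
```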

The Agents are the only parts of the SUT which perform JMS operations (i.e. talk directly to the JMS Server).

The Measurement Period is also known as the steady-state period. All Agents are running at their configured workload and no changes are made. The Controller will periodically (thirty seconds by default) check there are no errors and may also collect periodic realtime performance statistics.

In order to make sure all produced messages have an opportunity to be consumed, the Controller signals Agents to pause their load-generating threads (Drivers). This period is not expected to be long in duration as a noticeable backlog in messages would invalidate audit requirements on throughput anyway.

Agents will terminate all Event Handlers but remain present themselves so that the Controller can collect final statistics.

Having collected statistics from all parties, the Controller begins post-processing them into different reports. This process can take some time; its duration is related to the total number of Event Handlers involved in the benchmark.

Before you can run the benchmark you must create the JMS Destinations (and matching JNDI entries) on the Server. Only the required Destinations will be created, so make sure the configuration is correct before running the setup component. A useful tip is to configure a large distribution, run setup, then revert to the original configuration; this avoids the need to re-run setup as the benchmark size increases.

If the Provider Module provides the ability to create JMS Destinations then it can be invoked by running ant jms-setup.

If you do not have a product-specific Provider Module then you need to:

Set run.properties:org.spec.specj.verbosity=ALL and run ant jms-setup. This will not create any Destinations but will print out the JNDI names that will be expected to exist.

If the JMS product supports dynamic creation of Destinations then an explicit setup step is not required. If the product supports dynamic creation using JNDI nomenclature such as dynamicQueue/DC_OrderQ1, this can be configured using org.spec.jms.jndi.template.queue=dynamicQueue/${__name__}. Similar properties exist for other JMS administered objects.

Each of these properties should be defined in the config/<vendor>.properties file, as required.
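The effect of such a template can be sketched as a plain placeholder substitution. The function name `jndi_name` is hypothetical and the benchmark's real substitution logic is not shown in this guide; the template and Destination name are the ones used in the example above.

```python
def jndi_name(template, destination):
    """Expand the ${__name__} placeholder with a Destination name."""
    return template.replace("${__name__}", destination)

print(jndi_name("dynamicQueue/${__name__}", "DC_OrderQ1"))
```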

Before the Controller is run, Ant will first run a helper application known as the pre-auditor. This simply checks JMS Destinations for existing messages which would invalidate a run (but might otherwise only be detected when the test is completed). If the audit fails for any reason then the benchmark is not started.

The pre-audit is run on the Controller node. In order to supply the correct CLASSPATH to the pre-auditor it is necessary (on Windows) to update the CLASSPATH environment variable to include the relevant product-specific jars. On non-Windows environments the content of default.env is included in the pre-audit CLASSPATH.

When configured, the benchmark is started by calling ant startController or ant startSatellite respectively on each Node (the JMS Client jars must be on the CLASSPATH). All invocations must be within 60 seconds of each other or the Framework will terminate.

To start the Framework JVMs manually you will need a command line like java org.spec.jms.framework.SPECjmsControlDriver or java org.spec.jms.framework.SPECjmsSatelliteDriver. Certain Java properties can be specified with java -D<param>=<value> org.spec....

| Parameter | Description |

|---|---|

| node.name | If the Satellite does not report itself correctly (i.e. its own name does not match what the Controller expects from the configuration files) then this property can be used to force the name. |

| controller.host | This is the name (or IP address) of the node hosting the Controller. If missing, this is presumed to be the node name. |

| org.spec.specj.home.dir | This defaults to the current directory but can be used to invoke the benchmark from elsewhere. |

| org.spec.* | Any of the standard configuration file properties can be overridden by including them in the Java invocation. |

In order to make full use of the hardware and software, the following elements are key areas in which the benchmark can be changed (whilst still creating submittable results). See Section 6.3 to find out how observations of failing results will help indicate when you may wish to apply some of these.

It is the user's responsibility to monitor (using -verbose:gc) and increase (using -Xmx and -Xms) the Java heap size of the Agents. These values can be added to the java invocation specified in config/default.env. Values set in this file are applied to all AgentJVMs on a given Node. It may be preferable to apply different heap tuning to different classes of Agent based upon their profiles. This can be achieved using, for example, org.spec.jms.sm.jvmOptions = -Xmx256M -Xms256M. It is suggested that garbage collection occur no more often than once every two seconds and that the minimum and maximum heap values are always equal.

SPECjms2007 Clients need RAM, CPU and network to operate efficiently. It is the user's responsibility to ensure that there are no limitations in any of these resources.

Where Client hardware does provide limitations, the four org.spec.jms.*.nodes parameters can be used to distribute Agents across many Client machines. Each property takes a white-space-separated list of Node names. These need to be the names Satellites use to identify themselves and will normally be the DNS names. Agents are distributed across the Nodes in a round-robin algorithm with the following features.

There are no limitations on the interrelation of the sets used for the four properties but the following should be kept in mind:

The default (and minimum) number of JVMs is one per class of Location on each Node (giving a default maximum of four JVMs per Node). The number of Agents configured for the Node will be distributed into those JVMs. As a Topology scales horizontally this will become a limitation observable by java.lang.OutOfMemoryError being thrown. See the previous tip for more details on controlling Java heap. These values can be increased through the org.spec.jms.*.jvms_per_node properties but care should be taken that the total Java heap allocated is not greater than the free memory on each Node. Setting the value to 0 uses one JVM for every Agent.

Users can increase the number of Event Handlers on individual Interaction Flow Steps. For point-to-point receivers which cannot keep up with the volume of incoming messages this may provide a significant boost. For Interaction Driver classes, the throughput assigned will be shared across duplicate Event Handlers. Subscribing Event Handlers cannot be duplicated in this fashion as this would actually increase the system load.

When testing with the default configuration in Vertical mode, the BASE should be set to 10 or higher. Setting the BASE to a value lower than 10 results in the message rate per Driver thread being too low to allow for enough messages to be generated during the run such that the empirical message inter-arrival time distribution is close enough to the target distribution. As a consequence of this, results would exhibit poor repeatability.

For both the Horizontal and Vertical Topology, as you scale the workload, if you over-configure the number of Drivers you might get a warning "Thread Sleep time = X sec > Y sec. It is likely that too many Driver threads have been configured for the defined BASE". If this warning is shown only occasionally during the run, it can be safely ignored. If it appears often, you should decrease the number of configured Drivers for the respective Interactions.

This is similar to using multiple Event Handlers. An Agent will be "cloned" and the copy could then (with careful assignment of Nodes and JVMs) be run elsewhere. Note that load configured for Driver threads is shared evenly and that subscribers are not cloned (but will be distributed amongst doppelgangers).

Each Agent has a number of JMS Connection objects to share amongst its Event Handlers. Each Event Handler will open a new Session on the supplied Connection. Increasing the number of org.spec.jms.*.max_connections_per_agent may remove a bottleneck but bear in mind the JMS Specification does not guarantee that a single Connection object actually equates to a single TCP/IP connection - this is a product-specific behavior.

It is possible to specify the connection factory used by each individual Event Handler. This provides a location to apply tuning settings tailored to the specific operation of that thread. Please note that any such changes must be within the bounds of the Run Rules.

There are several methods to allow JVM profiling/tracing to occur at different granularities. Hopefully one of these will work with the tools available to you.

When holding an Agent in a frozen state, be aware that there are many timing conditions in the code that will complain (and potentially attempt to terminate the test) if certain actions are not completed in the correct time.

It is very simple to change the default Java invocation for all Client JVMs. This might help you trace the entire SUT or allow an external profiler to be targeted at particular JVMs (if you can identify them apart from each other at this point). Just add the required lines to the default.env file on relevant machines or the org.spec.jms.*.jvmOptions property.

SPECjms2007 allows the user to specify individual Agents to "exclude". These will not be started by the SatelliteDrivers but the ControlDriver will still pause and wait for that Agent to register itself. This gives the user a 60-second window to manually start the corresponding java process.

Edit the <topology>.properties file and add a Location id (e.g. "1") to the relevant org.spec.jms.*.exclude-agents parameter.

Interactions can be disabled by setting the associated Driver rate (e.g. org.spec.jms.dc.DC_Interaction2DR.rate) to zero. This will still create all associated Agents and Event Handlers but will not deliver any messages into that part of the system. Other methods to achieve the same goal may not work.

The Controller takes formal measurements at three points during the run. The first two, the beginning and end of the Measurement Period, are used to audit the messaging throughput. The final, at the end of the Drain Period, is used to audit the final message counts. The description of each period is given in Section 1.1.

The Controller may optionally take periodic measurements to display progress to users. Such samples are not part of the formal measurement process.

All results files are saved into a numbered directory such as /output/NNNNN.

Console output of the SPECjms2007 Framework is also copied into controller.txt and satellite-<nodename>.txt respectively.

Note that Satellite output is NOT copied back to the Controller but resides in a correspondingly named directory on the Satellite Node.

The first file to inspect is the last one created, which is specjms-result.html. This is a simple translation and coloring of its XML source but it is much more consumable when visually assessing results. See the next section for details on the contents.

This contains the final audit checks and determines if the current configuration passed or failed. The key lines to look for (near the end of the file) are

<test desc="All tests passed" result="true"/>

<metric type="horizontal" unit="SPECjms2007@Horizontal" validConfig="true" value="25" />

which tell you that all tests passed and, further, that the configuration is a submittable one (the Topology has not been customised). If the run failed an audit test, more details can be found further up in the XML structure. The following table describes each audit check. When taken together, they ensure the benchmark could not have failed or run unevenly over its run length.

| Audit test | Scope | Description |

|---|---|---|

| Input rate is within +-5% of configured value | Interaction | For each Interaction, the observed input rate is calculated as count/time for the Measurement Period. This must be within 5% of the value prescribed by the Topology. |

| Total message count is within +-5% of configured value | Interaction | Using a model of the scenario, the benchmark knows how many messages should be processed as part of each Interaction. The observed number of messages sent and received by all parties must be within 5% of this value. |

| Input rate distribution deviations do not exceed 20% | Interaction | The percentage of pacing misses (in Driver threads) the benchmark will allow. A miss is when the timing code found itself behind where it thought it should be. |

| 90th percentile of Delivery Times on or under 5000ms | Destination | Messages are timestamped when sent and received. The consequent Delivery Time is recorded as a histogram (for the Measurement Period only) in each Event Handler and the 90th percentile of this histogram must be on or under 5000ms. |

| All messages sent were received | Destination | Fails if the final results (taken after the Drain Period) show that not all sent messages were received. For publish-subscribe topics this means received by all subscribers. |
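The two +-5% checks can be sketched as follows. The function names and the example figures (a 600 second Measurement Period and a configured rate of 10 messages per second) are hypothetical; only the 5% tolerance and the count/time definition of the input rate come from the table above.

```python
def within_tolerance(observed, configured, tolerance=0.05):
    """True if observed lies within +-tolerance of the configured value."""
    return abs(observed - configured) <= tolerance * configured

def input_rate_ok(count, seconds, configured_rate):
    """Check the observed input rate (count/time) against the target."""
    return within_tolerance(count / seconds, configured_rate)

# 5820 messages in 600 s is 9.7 msg/s against a target of 10 msg/s.
print(input_rate_ok(count=5820, seconds=600, configured_rate=10))
```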

By default, the configuration directory used in the test is automatically copied into the results directory. This is a low tech process and will include all files whether actively used in this run or not. For this reason it is prudent not to place any large files into the config directory.

Statistics are processed into many files. Selection of which files to create is provided by the org.spec.jms.report.* properties.

It should be noted that certain files will not contain valid data for the intermediate periods. The "final" statistics capture always contains the full data set.

| Filename | Description |

|---|---|

| detail.* | These show the results from every single Event Handler. They may also optionally show the throughput history of each Destination where each entry in the array represents the (rounded!) rate for a 10 second time period. |

| interaction.* | This shows most of the data from detail.* but aggregates all identical Event Handler classes, hence providing a uniform view (over multiple configurations) of Interactions and their steps. |

| jms.* | These aggregate the Event Handler data based upon the JMS Destinations used but use an inferior technique to the Interaction summaries and are not always accurate (particularly for short runs). |

| prediction.* | These contain the internally calculated values for expected throughputs and message counts (i.e. they are not based upon observation). If the test passed audit, then the actual values should be within a few percent of predictions. |

| runtime.* | Contains the data samples taken by the runtime reporter if it was enabled. |

These files are also multiplied by the period on which they are reporting:

| Filename | Description |

|---|---|

| *.warmup | Data from the Warmup Period only. |

| *.measurement | Data from the Measurement Period only. |

| *.drain | Data from the Drain Period only. |

| *.final | Data for the entire run (including Warmup Period and Drain Period). |

Many of the above combinations can be further refined to create XML or TXT files although not all possible combinations have been implemented.

When a test fails there are several pointers as to which part failed. The first thing to check is which of the audits in specjms-result.xml failed. There is often a cause and effect at play here: the most specific failure is the one which causes higher level audits to fail as well, and it is the best one to investigate.

<destination name="DC_OrderQ1" type="queue">

<test desc="90th percentile of Delivery Times on or under 5000ms" result="false"/>

<test desc="All messages sent were received" result="true"/>

</destination>

If Delivery Times were outside of the maximum 90th percentile then the Event Handler to which that audit result applies needs to be given more resources. This is both the most common and the easiest solution to deal with. Options are (in loose order of priority) to increase

If not all messages were received (this is measured after the Drain Period) then the Destination has a significant backlog. Nevertheless, the same advice is given as before. It is also suggested to increase the Drain Period to remove this error condition as, whilst not solving the cause, it means tests can be re-run quickly.

Where the Interaction input rate has not kept up but other parts of the same Interaction pass, it is expected that system latency is simply too high and the benchmark has reached a natural limit (CPU or I/O). In products with in-built pacing, streamlining the receiving Event Handlers may improve the overall situation.
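To locate failing audits quickly, the result XML can be scanned programmatically. The sketch below works on a fragment shaped like the DC_OrderQ1 example shown earlier. The wrapping `<result>` element and the function name `failed_audits` are assumptions, since the full layout of specjms-result.xml is not reproduced in this guide.

```python
import xml.etree.ElementTree as ET

# Fragment modelled on the DC_OrderQ1 example; the <result> root element
# is a hypothetical wrapper added so that the fragment parses on its own.
SAMPLE = """
<result>
  <destination name="DC_OrderQ1" type="queue">
    <test desc="90th percentile of Delivery Times on or under 5000ms" result="false"/>
    <test desc="All messages sent were received" result="true"/>
  </destination>
</result>
"""

def failed_audits(xml_text):
    """Return (parent name, test description) for every failed test."""
    root = ET.fromstring(xml_text)
    failures = []
    for parent in root.iter():
        for test in parent.findall("test"):
            if test.get("result") == "false":
                failures.append((parent.get("name"), test.get("desc")))
    return failures

for name, desc in failed_audits(SAMPLE):
    print(name, "failed:", desc)
```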

The most at-risk Event Handlers, even in a system that passes all tests, can be identified by looking in interaction.measurement.xml at the histogram of Delivery Times which looks like the below sample. Each entry represents 100ms and each number is the count of delivery times within that window. A well-running Event Handler will have values bunched up close to 0 but poor performers can be seen by much more prolonged or uneven distributions.

<deliveryTime avg="446.36" granularity="100" max="2964" percentile90="2200.0">

663, 104, 27, 21, 5, 14, 6, 5, 10, 10, 0, 0, 0, 5, 6, 14, 5, 10, 13,

7, 12, 17, 13, 17, 14, 4, 13, 14, 12, 4, 0, 0, 0, 0

</deliveryTime>

A tip here is to sort the Flow Steps within each Interaction by their average Delivery Time to deliver a list of performance critical areas.
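The relationship between the histogram entries and the percentile90 attribute can be sketched as follows. The function takes the upper edge of the first bucket at which the cumulative count reaches 90% of all deliveries. That this is how the benchmark derives the value is an assumption, although it does reproduce the 2200.0 reported in the sample histogram above. The function name is hypothetical.

```python
def percentile_from_histogram(counts, granularity_ms, fraction=0.9):
    """Return the upper edge (ms) of the bucket where the cumulative
    count first reaches the given fraction of all deliveries."""
    total = sum(counts)
    if total == 0:
        return None
    threshold = fraction * total
    running = 0
    for index, count in enumerate(counts):
        running += count
        if running >= threshold:
            return float((index + 1) * granularity_ms)

# Counts copied from the sample deliveryTime element (granularity 100 ms).
counts = [663, 104, 27, 21, 5, 14, 6, 5, 10, 10, 0, 0, 0, 5, 6, 14, 5,
          10, 13, 7, 12, 17, 13, 17, 14, 4, 13, 14, 12, 4, 0, 0, 0, 0]
print(percentile_from_histogram(counts, 100))
```

A result well below the 5000ms audit limit, as here, indicates headroom. A value approaching the limit marks an Event Handler worth tuning first.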

A SPECjms2007 Result Submission is a single jar file containing the following components:

The intent is that using the above information it must be possible to reproduce the benchmark result given the equivalent hardware, software, and configuration.

SPECjms2007 includes a utility called SPECjms2007 Reporter for preparing an official benchmark Result Submission. The SPECjms2007 Reporter performs two functions:

The SPECjms2007 Reporter receives as input:

submission/submission.xml that includes detailed information on the SUT components and benchmark configuration used to produce the result.

output/NNNNN/ where NNNNN is the run ID.

submission/submission.jpg.

submission/submission.jar.

The submission/submission.xml file contains user-declared information on all static elements of the SUT and the benchmark configuration. This information is structured in four sections: benchmark-info, product-info, hardware-info and node-info. These describe the benchmark run, software products, hardware systems and system configurations, respectively. ID attributes are used to link elements together by an XSLT transformation. The user is responsible for fully and correctly completing these sections, providing all relevant information listed in Section 6 of the SPECjms2007 Run and Reporting Rules.

To run the SPECjms2007 Reporter change to the top-level directory of the SPECjms2007 Kit and type:

ant reporter -DrunOutputDir=<runOutputDir>

where <runOutputDir> is the directory containing the benchmark report files generated when running the benchmark, normally output/NNNNN/ where NNNNN is the run ID. A sample run output directory is provided under output/sample/.

The SPECjms2007 Reporter proceeds as follows:

submission/jms2007-submission/.

submission/submission.xml file with selected information from the benchmark report files into a single XML-based Submission File and stores this file under submission/jms2007-submission/jms2007-submission.xml.

submission/submission.jpg under submission/jms2007-submission/jms2007-submission.jpg.

submission/submission.jar under submission/jms2007-submission/jms2007-submission.jar.

submission/jms2007-submission.html

submission/jms2007-submission.sub

submission/jms2007-submission.png

Note that the FDR is not included in the Result Submission directory since it will be automatically generated on the SPEC server after the result is submitted to SPEC. However, the submitter must verify that the FDR is generated correctly from the Submission File when preparing the Result Submission.

To submit a result to SPEC:

submission/submission.xml file providing all relevant information listed in Section 6 of the SPECjms2007 Run and Reporting Rules.

submission/submission.jpg.

submission/submission.jar.

submission/jms2007-submission/ directory in a jar file jms2007-submission.jar using the following command:

jar -cfM jms2007-submission.jar -C submission/jms2007-submission/ .

Comment: Note the use of the -M switch when packaging the jms2007-submission.jar file. You must use this switch to make sure that no manifest file is included in the archive as required by SPEC.

The submitted archive should have the following structure:

jms2007-submission.xml

jms2007-submission.jpg

jms2007-submission.jar

Every Result Submission goes through a minimum two-week review process, starting on a scheduled SPEC OSG Java sub-committee conference call. During the review, members of the committee may ask for additional information or clarification of the submission. Once the result has been reviewed and accepted by the committee, it is displayed on the SPEC web site at http://www.spec.org/.

The property run.properties:org.spec.specj.verbosity=ALL will show extra detail from all parts of the benchmark. If any problems are being faced, be sure to set maximum verbosity.

The occurrence of runtime errors in Event Handlers and Agents will filter back to the Controller but it will not always know what the root cause of the remote exception was. It should be emphasised that the output from the Satellites will contain the full stack traces and other information.

Cygwin users should be aware that you cannot currently have a $ sign in the SPECjms2007 installation path.

The benchmark uses this buffer time to better assure that all Event Handlers are running at full speed before the first formal measurement point is taken.

A common initial problem is caused by incompatible versions of JAXP or Xerces on the CLASSPATH. SPECjms2007 is compiled against the Java 5 javax.xml API; older instances of the interfaces are not all compatible. Such problems will most commonly be seen as java.lang.NoSuchMethodError thrown in conjunction with package org.w3c.dom.

This probably means the Destinations were not drained properly in a previous run.

This is a limitation of your JVM's XML implementation. The simplest solution is to view the files with a web browser.

Setting arbitrary Event Handler counts to zero etc. is not yet fully supported in the results post-processing. It may also cause division by zero failures at runtime as the number of elements is used in some of the load distribution algorithms.

In order to maintain logical isolation between Agents in a single JVM, it becomes impossible for the JMS Client code to share a JNI library. If your product needs to use JNI you must configure *.jvms_per_node=0 to give each Agent its own JVM.

Event Handlers look up resources during their initialization. In cases where the lookup is very slow or when large numbers of resources need to be looked up, an Agent may be unable to generate the required heartbeats in a timely fashion. In such an event, the Controller shuts down all the Agents. However, the Controller currently does not abort the run as expected but waits in an endless loop.

Diagnostics: The following error will be printed to the controller.txt file:

Waiting for 18 DCAgent to register over RMI for 10 minutes (polling every 10.000 seconds)

[java] Heartbeat failed for an agent.

[java] Unexpected exception.

[java] org.spec.specj.SPECException: Received asynchronous request for immediate shutdown

...

[java] RunLevel[Starting Agents] stopped after 59 seconds

Potential Solution: Increase the heartbeat timeout by increasing the value of org.spec.rmi.timeout in the config/run.properties file.

When running with a Vertical Topology and a low BASE you may experience issues with input rates not being reached and instability. This is because setting a BASE of less than 10 can result in the message rate per Driver thread being too low to allow for enough messages to be generated during the run such that the empirical message inter-arrival time distribution is close enough to the target distribution.

Increasing the BASE will raise the message rates of the Driver threads and this will help overcome issues relating to instability and failed input rates.