SPECstorage™ Solution 2020_swbuild Result

Copyright © 2016-2025 Standard Performance Evaluation Corporation


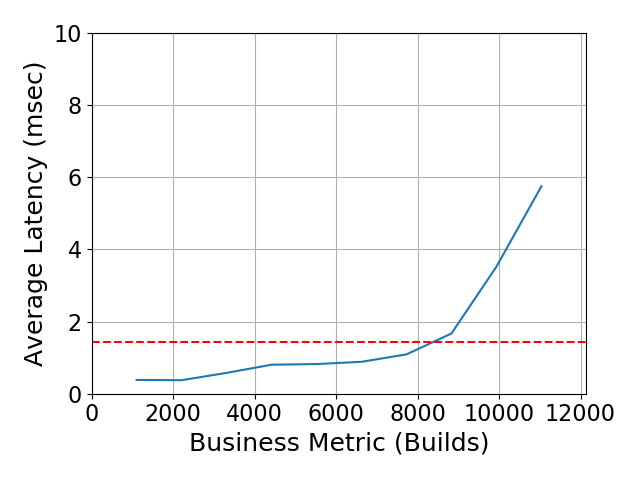

| NetApp Inc. | SPECstorage Solution 2020_swbuild = 11040 Builds |
|---|---|
| NetApp 8-node AFF A90 with FlexGroup | Overall Response Time = 1.42 msec |

| NetApp 8-node AFF A90 with FlexGroup | |
|---|---|
| Tested by | NetApp Inc. |
| Hardware Available | June 2024 |
| Software Available | March 2025 |
| Date Tested | March 2025 |
| License Number | 33 |
| Licensee Locations | Sunnyvale, CA USA |

Designed and built for customers seeking a storage solution for the high demands of enterprise applications, the NetApp high-end flagship all-flash AFF A90 delivers unrivaled performance, superior resilience, and best-in-class data management across the hybrid cloud. With an end-to-end NVMe architecture supporting the latest NVMe SSDs, and both the NVMe/FC and NVMe/TCP network protocols, it provides a performance increase of more than 27% over its predecessor, with ultra-low latency. Powered by ONTAP data management software, it supports non-disruptive scale-out to a cluster of 24 nodes.

ONTAP is designed for massive scaling in a single namespace to over 20PB with over 400 billion files, while evenly spreading the performance across the cluster. This makes the AFF A90 a great system for engineering and design applications as well as DevOps. It is particularly well suited for chip development and software builds, which are typically high file-count environments with heavy data and metadata traffic.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 4 | Storage System | NetApp | AFF A90 Flash System (HA Pair, Active-Active Dual Controller) | A single NetApp AFF A90 system is a chassis with 2 controllers. A set of 2 controllers comprises a High-Availability (HA) Pair. The words "controller" and "node" are used interchangeably in this document. One internal FS4483 disk shelf is direct-connected to the AFF A90 controllers, with 48 SSDs per disk shelf. Each AFF A90 HA Pair includes 2048GB of ECC memory, 128GB of NVRAM, 18 PCIe expansion slots and a set of included I/O ports. Included software: Core Bundle, Data Protection Bundle and Security and Compliance Bundle, which together include All Protocols, SnapRestore, SnapMirror, FlexClone, Autonomous Ransomware Protection, SnapCenter and SnapLock. Only the NFS protocol license, which is available in the Core Bundle, is active in the test. |
| 2 | 16 | Network Interface Card | NetApp | 2-Port 100GbE X50130A | 1 card in slot 1 and 1 card in slot 7 of each controller; 4 cards per HA pair; used for cluster connections. |
| 3 | 16 | Network Interface Card | NetApp | 2-Port 100GbE X50131A | 1 card in slot 6 and 1 card in slot 11 of each controller; 4 cards per HA pair; used for data connections to clients (4x 100 GbE ports per controller). |
| 4 | 4 | Internal Disk Shelf | NetApp | FS4483 (48-SSD Disk Shelf) | Disk shelf with capacity to hold up to 48 x 2.5" drives. |
| 5 | 192 | Solid-State Drive | NetApp | 3.8TB NVMe SSD X4011A | NVMe Solid-State Drives (NVMe SSDs) installed in the FS4483 disk shelves, 48 per shelf. |
| 6 | 1 | Network Interface Card | Mellanox Technologies | ConnectX-5 MCX516A-CCAT | 2-port 100 GbE NIC, one installed per Lenovo SR650 V2 client. lspci output: Mellanox Technologies MT27800 Family [ConnectX-5] |
| 7 | 24 | Network Interface Card | Mellanox Technologies | ConnectX-6 CX653106A-ECAT | 2-port 100 GbE NIC, one installed per Lenovo SR650 V3 client. lspci output: Mellanox Technologies MT28908 Family [ConnectX-6] |
| 8 | 4 | Switch | Cisco | Cisco Nexus 9336C-FX2 | Used for Ethernet data connections between clients and storage systems. Only the ports used for this test are listed in this report. See the 'Transport Configuration - Physical' section for connectivity details. |
| 9 | 2 | Switch | Cisco | Cisco Nexus 9336C-FX2 | Used for Ethernet connections of the AFF A90 storage cluster network. Only the ports used for this test are listed in this report. See the 'Transport Configuration - Physical' section for connectivity details. |
| 10 | 1 | Client | Lenovo | Lenovo ThinkSystem SR650 V2 | Lenovo ThinkSystem SR650 V2 client. System Board machine type is 7Z73CTO1WW, PCIe Riser part number R2SH13N01D7. The client also contains 2 Intel Xeon Gold 6330 CPU @ 2.00GHz with 28 cores, 8 DDR4 3200MHz 128GB DIMMs, a 240GB M.2 SATA SSD part number SSS7A23276, and a 240GB M.2 SATA SSD part number SSDSCKJB240G7. It is used as the Prime Client. |
| 11 | 24 | Client | Lenovo | Lenovo ThinkSystem SR650 V3 | Lenovo ThinkSystem SR650 V3 clients. System Board machine type is 7D76CTO1WW, PCIe Riser part number SC57A86662. Each client also contains 2 Intel Xeon Gold 5420+ CPU @ 2.00GHz with 28 cores, 32 DDR5 3200MHz 32GB DIMMs, and a 240GB M.2 SATA SSD part number ER3-GD240. All 24 SR650 V3 clients are used to generate the workload. |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | Linux | Operating System | RHEL 9.2 (Kernel 6.5.0-rc2+) | Operating System (OS) for the 24 clients and Prime. |
| 2 | ONTAP | Storage OS | 9.16.1P2 | Storage Operating System |
| 3 | Data Switch | Operating System | 9.3(7a) | Cisco switch NX-OS (system software) |

| Storage Parameter Name | Value | Description |
|---|---|---|
| MTU | 9000 | Jumbo Frames configured for data ports |

The data network was set up with an MTU of 9000.

| Client Parameter Name | Value | Description |
|---|---|---|
| rsize,wsize | 65536 | NFS mount options for the read and write transfer size |
| protocol | tcp | NFS mount option for the transport protocol |
| nfsvers | 3 | NFS mount option for the NFS version |
| nofile | 102400 | Maximum number of open files per user |
| nproc | 10240 | Maximum number of processes per user |
| sunrpc.tcp_slot_table_entries | 64 | Sets the number of TCP RPC slot entries to pre-allocate for in-flight RPC requests |
| net.core.wmem_max | 16777216 | Maximum socket send buffer size |
| net.core.wmem_default | 16777216 | Default size in bytes of the socket send buffer |
| net.core.rmem_max | 16777216 | Maximum socket receive buffer size |
| net.core.rmem_default | 16777216 | Default size in bytes of the socket receive buffer |
| net.ipv4.tcp_rmem | 1048576 8388608 33554432 | Minimum, default and maximum size of the TCP receive buffer |
| net.ipv4.tcp_wmem | 1048576 8388608 33554432 | Minimum, default and maximum size of the TCP send buffer |
| net.core.optmem_max | 4194304 | Maximum ancillary buffer size allowed per socket |
| net.core.somaxconn | 65535 | Maximum TCP listen backlog an application can request |
| net.ipv4.tcp_mem | 4096 89600 8388608 | Memory limits across all TCP sockets, in 4096-byte pages: minimum, pressure threshold and maximum |
| net.ipv4.tcp_window_scaling | 1 | Enables TCP window scaling |
| net.ipv4.tcp_timestamps | 0 | Turns off TCP timestamps to reduce performance spikes related to timestamp generation |
| net.ipv4.tcp_no_metrics_save | 1 | Prevents TCP from caching connection metrics when connections close |
| net.ipv4.route.flush | 1 | Flushes the routing cache |
| net.ipv4.tcp_low_latency | 1 | Allows TCP to prefer lower latency over maximizing network throughput |
| net.ipv4.ip_local_port_range | 1024 65000 | Defines the local port range from which TCP and UDP traffic choose a local port |
| net.ipv4.tcp_slow_start_after_idle | 0 | The congestion window is not reset after an idle period |
| net.core.netdev_max_backlog | 300000 | Maximum number of packets queued on the input side when the interface receives packets faster than the kernel can process them |
| net.ipv4.tcp_sack | 0 | Disables TCP selective acknowledgements |
| net.ipv4.tcp_dsack | 0 | Disables duplicate SACKs |
| net.ipv4.tcp_fack | 0 | Disables forward acknowledgement |
| vm.dirty_expire_centisecs | 30000 | Defines when dirty data is old enough to be eligible for writeout by the kernel flusher threads. Unit is 100ths of a second. |
| vm.dirty_writeback_centisecs | 30000 | Defines the interval between periodic wake-ups of the kernel flusher threads that write dirty data to disk. Unit is 100ths of a second. |
| vm.swappiness | 0 | Controls how aggressively the kernel swaps out process memory; a value of 0 minimizes swapping. |
| vm.vfs_cache_pressure | 0 | Controls the tendency of the kernel to reclaim the memory used for caching directory and inode objects. |
| vm.dirty_ratio | 10 | Percentage of system memory that can be filled with dirty pages before processes are forced to write out their dirty data to disk, preventing excessive memory consumption and potential I/O issues. |
| vm.dirty_background_ratio | 5 | Percentage of system memory that, when dirty, triggers the background writeback threads to start writing data to disk |
| Init_rate_speed | 50 | The default value is 0.0, indicating no throttling. If set to a value x.y, dataset initialization is throttled to x.y MB/sec per instance. This setting is useful for both internal and publication runs, as it helps achieve the correct levels of deduplication and compression. Throttling may be necessary on systems that disable compression and deduplication under heavy write workloads during dataset initialization, to ensure that data is properly compressed or deduplicated. |
| KEEPALIVE | 600 | This parameter is passed by SM2020 to the netmist Prime and subsequently to the child processes. It sets the frequency of keep-alive messages between the components of the benchmark, specifically from the child to NM to Prime. Its sole purpose is to terminate the benchmark if it detects that the distributed system is hung. |

The client parameters shown above were tuned for communication between clients and storage controllers over Ethernet, to optimize data transfer and minimize overhead.
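As an illustration of how the kernel tunables in the client table could be captured in one place, the sketch below renders a subset of them in `sysctl.conf` syntax and converts two of the less obvious units (centiseconds and 4096-byte pages). It is a minimal sketch, not the tooling used for this result: the selection of parameters and the target file name mentioned in the comment are assumptions. `nofile` and `nproc` are per-user resource limits rather than sysctls, so they are left out.

```python
# Hypothetical helper: renders some of the client kernel tunables from the
# table above as sysctl.conf "key = value" lines. The parameter selection and
# the suggested file name are illustrative assumptions.

CLIENT_SYSCTLS = {
    "sunrpc.tcp_slot_table_entries": "64",
    "net.core.wmem_max": "16777216",
    "net.core.wmem_default": "16777216",
    "net.core.rmem_max": "16777216",
    "net.core.rmem_default": "16777216",
    "net.ipv4.tcp_rmem": "1048576 8388608 33554432",
    "net.ipv4.tcp_wmem": "1048576 8388608 33554432",
    "net.ipv4.tcp_mem": "4096 89600 8388608",
    "vm.dirty_expire_centisecs": "30000",
    "vm.dirty_writeback_centisecs": "30000",
    "vm.dirty_ratio": "10",
    "vm.dirty_background_ratio": "5",
}

PAGE_SIZE = 4096  # net.ipv4.tcp_mem is expressed in pages of this size


def render_sysctl_conf(params: dict[str, str]) -> str:
    """Return sysctl.conf-style text with one 'key = value' line per parameter."""
    return "\n".join(f"{key} = {value}" for key, value in params.items()) + "\n"


def centisecs_to_seconds(value: int) -> float:
    """vm.dirty_*_centisecs values are in hundredths of a second."""
    return value / 100


def tcp_mem_max_bytes(tcp_mem: str, page_size: int = PAGE_SIZE) -> int:
    """Convert the third (maximum) tcp_mem field from pages to bytes."""
    return int(tcp_mem.split()[2]) * page_size


if __name__ == "__main__":
    # For example, save the output as /etc/sysctl.d/99-client.conf (hypothetical
    # name) and load it with `sysctl --system`.
    print(render_sysctl_conf(CLIENT_SYSCTLS), end="")
    print(centisecs_to_seconds(30000))  # 300.0 seconds
    print(tcp_mem_max_bytes(CLIENT_SYSCTLS["net.ipv4.tcp_mem"]) // 2**30)  # 32 (GiB)
```

The conversions make the table values easier to read: a 30000-centisecond expiry is 300 seconds, and a `tcp_mem` maximum of 8388608 pages is 32 GiB.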

The second M.2 SSD in each client was configured as a dedicated swap space of 224GB.

None

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | 3.8TB NVMe SSDs used for data and storage operating system; used to build three RAID-DP RAID groups per storage controller node in the cluster | RAID-DP | Yes | 192 |
| 2 | 3.8TB NVMe M.2 device, 1 per controller; used as boot media | none | Yes | 8 |

| Number of Filesystems | 1 |
|---|---|
| Total Capacity | 576TiB |
| Filesystem Type | NetApp FlexGroup |

The single FlexGroup utilized all data volumes from the aggregates across all nodes. To accommodate the high number of files required by the benchmark, the default inode density of the FlexGroup was adjusted immediately after creation using the 'volume modify' option '-files-set-maximum true'. Additionally, the atime update parameter was disabled on the FlexGroup.

The storage configuration consisted of 4 AFF A90 HA pairs (8 controller nodes total). The two controllers in an HA pair are connected in an SFO (storage failover) configuration. Together, all 8 controllers (configured as 4 HA pairs) comprise the tested AFF A90 HA cluster. Stated in reverse, the tested AFF A90 HA cluster consists of 4 HA pairs, each of which consists of 2 controllers (also referred to as nodes).

Each storage controller was connected to its own and its partner's NVMe drives in an HA configuration.

All NVMe SSDs were in active use during the test (aside from 1 spare SSD per shelf). In addition to the factory-configured RAID group housing its root aggregate, each storage controller was configured with two 21+2 RAID-DP RAID groups. There was 1 data aggregate on each node, each of which consumed one of the node's two 21+2 RAID-DP RAID groups. This is 2 nodes per shelf x (21+2 RAID-DP + 1 spare) x 4 shelves = 192 SSDs total. Sixteen volumes holding benchmark data were created within each aggregate. "Root aggregates" hold files related to the ONTAP operating system. Note that spare (unused) drive partitions are not included in the "storage and filesystems" table because they held no data during the benchmark execution.
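The drive-count formula in the paragraph above, together with the number of benchmark volumes it implies, can be re-derived with a few lines of arithmetic. This sketch only reproduces the document's own terms; the 128 total assumes that the 16 volumes in each of the 8 data aggregates are the FlexGroup's member volumes, as the filesystem description states that the FlexGroup used all data volumes across all nodes.

```python
# Re-derives the SSD and volume counts from the terms stated in the text above.
NODES_PER_SHELF = 2
DATA_DRIVES, PARITY_DRIVES, SPARES = 21, 2, 1  # 21+2 RAID-DP plus 1 spare, per node
SHELVES = 4
AGGREGATES = 8              # one data aggregate per node, 8 nodes
VOLUMES_PER_AGGREGATE = 16  # volumes holding benchmark data in each aggregate

total_ssds = NODES_PER_SHELF * (DATA_DRIVES + PARITY_DRIVES + SPARES) * SHELVES
benchmark_volumes = AGGREGATES * VOLUMES_PER_AGGREGATE

print(total_ssds)         # 192
print(benchmark_volumes)  # 128
```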

A storage virtual machine or "SVM" was created on the cluster, spanning all storage controller nodes. Within the SVM, a single FlexGroup volume was created using the one data aggregate on each controller. A FlexGroup volume is a scale-out NAS single-namespace container that provides high performance along with automatic load distribution and scalability.

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 100GbE | 57 | For the client-to-storage network, the AFF A90 cluster used a total of 32x 100 GbE connections from storage to the switches, communicating via NFSv3 over TCP/IP with 24 clients (excluding the Prime client), each using 1x 100 GbE connection to a switch. MTU=9000 was used for data switch ports. |
| 2 | 100GbE | 16 | The Cluster Interconnect network is connected via 100 GbE to Cisco Nexus 9336C-FX2 switches, with 4 connections to each HA pair. |

Each AFF A90 HA pair used 8x 100 GbE ports for data transport connectivity to clients (through Cisco Nexus 9336C-FX2 switches), Item 1 above. Each of the clients driving the workload used 1x 100 GbE port for data transport. All clients connected to two switches dedicated to client connections, and all storage data connections went to two switches dedicated to storage connections. The storage and client switches were connected by 2x 100 GbE ISLs. All ports on the Item 1 network used MTU=9000. The Cluster Interconnect network, Item 2 above, also used MTU=9000.
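The port counts quoted in the transport tables can be cross-checked with simple arithmetic. This sketch assumes that the 57 ports listed for Item 1 include a single 100 GbE link for the Prime client in addition to the 24 workload clients and 32 storage ports; that breakdown is an inference, not something the report states. The line rates are nominal link speeds, not measured throughput.

```python
# Cross-checks the Item 1 port count from the figures stated in the text.
# Assumption: the Prime client contributes one 100 GbE port to the 57.
HA_PAIRS = 4
DATA_PORTS_PER_HA_PAIR = 8  # 8x 100 GbE per HA pair
WORKLOAD_CLIENTS = 24
PRIME_CLIENTS = 1
PORTS_PER_CLIENT = 1        # 1x 100 GbE per client
LINK_GBPS = 100

storage_ports = HA_PAIRS * DATA_PORTS_PER_HA_PAIR
client_ports = (WORKLOAD_CLIENTS + PRIME_CLIENTS) * PORTS_PER_CLIENT
total_ports = storage_ports + client_ports

# Nominal line rates only; these are not measured throughputs.
storage_line_rate_gbps = storage_ports * LINK_GBPS
workload_line_rate_gbps = WORKLOAD_CLIENTS * PORTS_PER_CLIENT * LINK_GBPS

print(storage_ports, client_ports, total_ports)         # 32 25 57
print(storage_line_rate_gbps, workload_line_rate_gbps)  # 3200 2400
```

At nominal line rate, the 24 workload clients together offer 2.4 Tb/s against 3.2 Tb/s on the storage side, so the client links are the narrower side of the data fabric.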

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | Cisco Nexus 9336C-FX2 | 100GbE | 36 | 18 | 12 client-side 100 GbE data connections, 1 port per client. 6 100 GbE ISL connections: 2 to the other client switch and 2 to each storage switch. Only the ports on the Cisco Nexus 9336C-FX2 used for the solution under test are included in the used port count. |
| 2 | Cisco Nexus 9336C-FX2 | 100GbE | 36 | 18 | 12 client-side 100 GbE data connections, 1 port per client. 6 100 GbE ISL connections: 2 to the other client switch and 2 to each storage switch. Only the ports on the Cisco Nexus 9336C-FX2 used for the solution under test are included in the used port count. |
| 3 | Cisco Nexus 9336C-FX2 | 100GbE | 36 | 14 | 16 storage-side 100 GbE data connections, 2 ports per controller. 6 100 GbE ISL connections: 4 to the 2 client switches and 2 to the other storage switch. Only the ports on the Cisco Nexus 9336C-FX2 used for the solution under test are included in the used port count. |
| 4 | Cisco Nexus 9336C-FX2 | 100GbE | 36 | 14 | 16 storage-side 100 GbE data connections, 2 ports per controller. 6 100 GbE ISL connections: 4 to the 2 client switches and 2 to the other storage switch. Only the ports on the Cisco Nexus 9336C-FX2 used for the solution under test are included in the used port count. |
| 5 | Cisco Nexus 9336C-FX2 | 100GbE | 36 | 28 | 1 port per A90 node, for Cluster Interconnect. |
| 6 | Cisco Nexus 9336C-FX2 | 100GbE | 36 | 28 | 1 port per A90 node, for Cluster Interconnect. |

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 16 | CPU | Storage Controller | Intel Xeon Gold 6438N | NFS, TCP/IP, RAID and Storage Controller functions |
| 2 | 48 | CPU | Lenovo SR650 V3 Client | Intel Xeon Gold 5420+ | NFS Client, Linux OS |
| 3 | 2 | CPU | Lenovo SR650 V2 Client | Intel Xeon Gold 6330 | Prime Client, Linux OS |

Each of the 8 NetApp AFF A90 Storage Controllers contains 2 Intel Xeon Gold 6438N processors with 64 cores each; 2.00 GHz, hyperthreading enabled. Each of the 24 Lenovo SR650 V3 clients contains 2 Intel Xeon Gold 5420+ processors with 28 cores at 2.00GHz. All 25 clients have hyperthreading enabled.

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| Main Memory for NetApp AFF A90 HA Pair | 2048 | 4 | V | 8192 |
| NVDIMM (NVRAM) Memory for NetApp AFF A90 HA pair | 128 | 4 | NV | 512 |
| Memory for each of 25 clients | 1024 | 25 | V | 25600 |
| Grand Total Memory Gibibytes | | | | 34304 |
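The memory table's row totals and grand total follow directly from size times instance count. The sketch below recomputes them and cross-checks the 1024 GiB per-client figure against the DIMM counts in the hardware table, assuming the nominal DIMM sizes (listed as GB) are binary capacities, as DIMM sizes conventionally are.

```python
# (size in GiB, number of instances) for each row of the memory table above
MEMORY_ROWS = {
    "AFF A90 HA pair main memory": (2048, 4),
    "AFF A90 HA pair NVRAM": (128, 4),
    "Client memory": (1024, 25),
}

row_totals = {name: size * count for name, (size, count) in MEMORY_ROWS.items()}
grand_total = sum(row_totals.values())

# Per-client memory cross-checked against the DIMM counts in the hardware table
sr650_v2_gib = 8 * 128  # Prime client: 8x 128GB DDR4 DIMMs
sr650_v3_gib = 32 * 32  # workload clients: 32x 32GB DDR5 DIMMs

print(row_totals)
print(grand_total)                 # 34304
print(sr650_v2_gib, sr650_v3_gib)  # 1024 1024
```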

Each storage controller has main memory that is used for the operating system and for caching filesystem data. Each controller also has NVRAM; see "Stable Storage" for more information.

The AFF A90 utilizes non-volatile battery-backed memory (NVRAM) for write caching. When a file-modifying operation is processed by the filesystem (WAFL), it is written to system memory and journaled into a non-volatile memory region backed by the NVRAM. This memory region is often referred to as the WAFL NVLog (non-volatile log). The NVLog is mirrored between the nodes in an HA pair and protects the filesystem from any SPOF (single point of failure) until the data is de-staged to disk via a WAFL consistency point (CP). In the event of an abrupt failure, data that was committed to the NVLog but has not yet reached its final destination (disk) is read back from the NVLog and subsequently written to disk via a CP.
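The write path described above (cache in memory, journal to a mirrored NVLog, acknowledge, de-stage at a consistency point, replay after a failure) can be illustrated with a toy model. This is a hypothetical sketch of the general journaling-and-mirroring idea only; the class and method names are invented for illustration and do not reflect how ONTAP or WAFL is actually implemented.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Toy controller: volatile cache, local NVLog, mirror of partner's NVLog, disks."""
    name: str
    memory: dict = field(default_factory=dict)          # volatile; lost on failure
    nvlog: list = field(default_factory=list)           # local non-volatile journal
    partner_mirror: list = field(default_factory=list)  # copy of the partner's NVLog
    disk: dict = field(default_factory=dict)            # persistent storage


class ToyHAPair:
    """Hypothetical model of journaled, mirrored writes (not ONTAP's implementation)."""

    def __init__(self) -> None:
        self.primary = Node("node-a")
        self.partner = Node("node-b")

    def write(self, key: str, value: int) -> str:
        # Cache the update, journal it locally and mirror it to the partner
        # before acknowledging the client.
        self.primary.memory[key] = value
        self.primary.nvlog.append((key, value))
        self.partner.partner_mirror.append((key, value))
        return "ack"

    def consistency_point(self) -> None:
        # De-stage cached data to disk; the journal entries are then redundant.
        self.primary.disk.update(self.primary.memory)
        self.primary.nvlog.clear()
        self.partner.partner_mirror.clear()

    def fail_primary(self) -> None:
        # The primary loses its volatile memory. In this toy model the partner
        # replays the mirrored entries and writes them to the primary's disks.
        self.primary.memory.clear()
        for key, value in self.partner.partner_mirror:
            self.primary.disk[key] = value
        self.partner.partner_mirror.clear()


pair = ToyHAPair()
pair.write("file1", 1)
pair.consistency_point()
pair.write("file2", 2)    # acknowledged, but not yet on disk
pair.fail_primary()
print(pair.primary.disk)  # {'file1': 1, 'file2': 2}
```

The key property the model shows is that an acknowledged write survives the loss of volatile memory because a copy exists in the partner's mirrored log until a consistency point makes it durable on disk.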

All clients accessed the FlexGroup from all available storage network interfaces.

Unlike a general-purpose operating system, ONTAP does not provide mechanisms for non-administrative users to run third-party code. Due to this behavior, ONTAP is not affected by either the Spectre or Meltdown vulnerabilities. The same is true of all ONTAP variants, including both ONTAP running on FAS/AFF hardware and virtualized ONTAP products such as ONTAP Select and ONTAP Cloud. In addition, FAS/AFF BIOS firmware does not provide a mechanism to run arbitrary code and thus is not susceptible to either the Spectre or Meltdown attacks. More information is available from https://security.netapp.com/advisory/ntap-20180104-0001/.

All client systems used to perform the test were patched against Spectre and Meltdown (CVE-2017-5754, CVE-2017-5753, CVE-2017-5715).

ONTAP Storage Efficiency techniques including inline compression and inline deduplication were enabled by default, and were active during this test. Standard data protection features, including background RAID and media error scrubbing, software validated RAID checksum, and double disk failure protection via double parity RAID (RAID-DP) were enabled during the test.

Please reference the configuration diagram. 24 clients were used to generate the workload; 1 client acted as Prime Client to control the 24 workload clients. Each client used one 100 GbE connection, through a Cisco Nexus 9336C-FX2 switch. Each storage HA pair had 8x 100 GbE connections to the data switches. The filesystem consisted of one ONTAP FlexGroup. The clients mounted the FlexGroup volume as an NFSv3 filesystem. The ONTAP cluster provided access to the FlexGroup volume on every 100 GbE port connected to the data switches (32 ports total). Each of the 8 cluster nodes had 1 Logical Interface (LIF) on each of its 4x 100 GbE data ports, for a total of 4 LIFs per node and 32 LIFs for the AFF A90 cluster. Each client created mount points across those 32 LIFs symmetrically.

There are 32 mounts per client. An example mount configuration from one client is shown below.

/etc/fstab entry: `192.168.5.5:/vino_sfs2020_fg_1 /t/wle-vino-int-hi-05_e6a/vino_sfs2020_fg_1 nfs hard,proto=tcp,vers=3,rsize=65536,wsize=65536 0 0`

Output of `mount | grep sfs`: `192.168.5.5:/vino_sfs2020_fg_1 on /t/wle-vino-int-hi-05_e6a/vino_sfs2020_fg_1 type nfs (rw,relatime,vers=3,rsize=65536,wsize=65536,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,mountaddr=192.168.5.5,mountvers=3,mountport=635,mountproto=tcp,local_lock=none,addr=192.168.5.5)`
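Because every client mounts the same FlexGroup export once through each of the 32 LIFs, the per-client fstab entries follow a regular pattern. The sketch below generates such entries using the export path and mount options from the example above. Everything else is hypothetical: the LIF names, the `192.168.5.x` addresses, and the port names `e6a`, `e6b`, `e11a` and `e11b` (assumed from the 2-port data cards in slots 6 and 11) are placeholders, not the tested configuration.

```python
NFS_OPTIONS = "hard,proto=tcp,vers=3,rsize=65536,wsize=65536"  # from the fstab example
EXPORT = "/vino_sfs2020_fg_1"  # FlexGroup junction path from the example
# Assumed port names for the 2-port data cards in slots 6 and 11 of each node
DATA_PORTS = ["e6a", "e6b", "e11a", "e11b"]


def build_lifs(nodes: int = 8) -> list[tuple[str, str]]:
    """Hypothetical LIF inventory: one (name, address) pair per data port per node."""
    lifs = []
    for n in range(1, nodes + 1):
        for i, port in enumerate(DATA_PORTS):
            # Placeholder addresses, numbered 1..32 across the cluster
            address = f"192.168.5.{(n - 1) * len(DATA_PORTS) + i + 1}"
            lifs.append((f"node{n:02d}_{port}", address))
    return lifs


def fstab_lines(lifs: list[tuple[str, str]], export: str = EXPORT) -> list[str]:
    """One fstab entry per LIF: the same export, mounted at a distinct per-LIF path."""
    return [
        f"{address}:{export} /t/{name}{export} nfs {NFS_OPTIONS} 0 0"
        for name, address in lifs
    ]


entries = fstab_lines(build_lifs())
print(len(entries))  # 32
print(entries[0])
```

Giving each LIF its own mount point lets the benchmark spread its client processes evenly over all 32 paths into the single namespace, which is one way to realize the symmetric mount layout described above.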

NetApp, Data ONTAP and WAFL are registered trademarks, and FlexGroup is a trademark, of NetApp, Inc. in the United States and other countries. All other trademarks belong to their respective owners and should be treated as such.

Generated on Wed Apr 16 21:00:05 2025 by SpecReport
